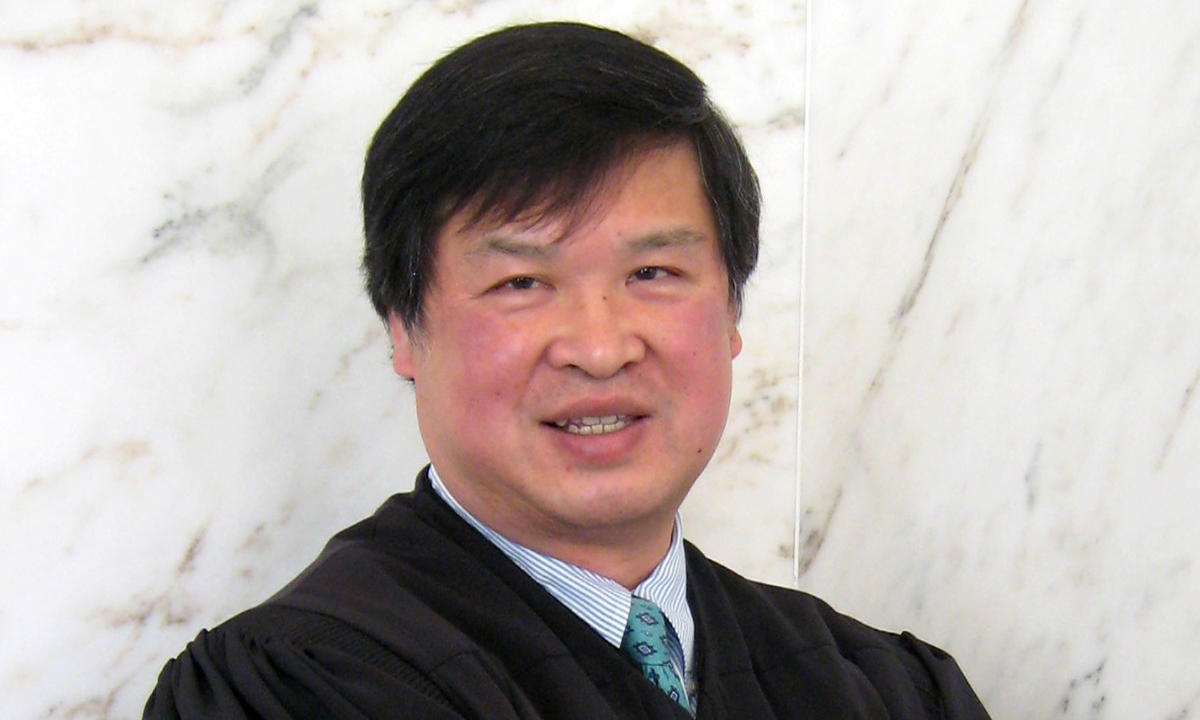

In a fireside chat-style discussion, Vanderbilt Law Professor J.B. Ruhl discussed the use of AI in law and its implications for future lawyers with John Nay, a Visiting Scholar on AI & Law, a Fellow at Stanford University’s Center for Legal Informatics (CodeX), and the CEO of Norm Ai.

Large Language Models and Litigation

Ruhl kicked off the discussion by unpacking the components of large language models, such as the parameters and tokens used in training, and their significance in litigation, as distinct from machine learning and natural language processing more broadly.

Nay described large language models as statistical models characterized by layers of parameters, further influenced by Structured Machine Learning (SML). The term “language model” refers to the manipulation of language data, particularly text. Building one involves aggregating extensive text data and converting it into tokens, which serve as units of meaning but are not confined to whole words. The result is a layered technological framework made up of the underlying models, the software companies that use them, and end users.

Nay noted a significant governance challenge stemming from this complexity: determining accountability when problems arise. “Who is responsible when things go wrong?” he asked. Nay said he anticipates a surge in regulatory and litigation challenges in the coming year.

AI Usage for Emerging Lawyers

Nay suggested that future lawyers practice prompt engineering, which involves interacting with platforms like ChatGPT-4 to test various legal tasks and questions. By experimenting with different approaches and observing the qualitative outcomes, one can gain valuable insight into how to steer the model effectively. Nay emphasized that this hands-on practice, alongside traditional methods, can significantly enhance one’s skills and readiness for the evolving legal landscape.

Ruhl underscored the accessibility of AI tools for non-coders, likening prompt engineering to familiar tasks such as refining searches in Westlaw. He stressed the importance of understanding basic concepts like tokens and training while focusing on honing prompt engineering skills. Ruhl also highlighted the versatility of prompt engineering in legal contexts, from simulating depositions to critiquing briefs, mirroring the collaborative processes already integral to legal practice.

Ultimately, both Nay and Ruhl encouraged the students in attendance to engage proactively with AI technologies to advance their legal proficiency and adaptability.

The Threat of AI Misuse in Law

Concerns about AI displacing human roles, particularly within the legal sector, are heightened by the threat of overuse and misuse of the technology. As powerful tools become increasingly available, the legal industry must consider carefully how these advancements empower legal practitioners and how far their impact extends. Nay emphasized the importance of leveraging the evolving capabilities of AI models to bolster productivity and provide enhanced value to clients. As the technology progresses, he said, legal practitioners need to embrace these advancements and incorporate them into their workflows.

However, Nay also cautioned against overlooking the irreplaceable aspects of human judgment. “While AI can augment certain tasks, the essence of human expertise remains indispensable,” he said, “particularly in providing trusted legal counsel and navigating sensitive client matters.”